Early on, in my misspent youth, I aspired to be both good-looking and smart. So I read Vogue magazine when I could afford it, looking for interesting ways to dress on my embarrassingly meager salary, so as to attract interesting men. I didn’t get very far with this ambition, but I was apparently attractive enough that after my first divorce I dated highly intelligent men with Good Prospects. As it turns out, though, it might have been the other aspiration that attracted them, rather than my questionable gorgeosity.

To foster the “smart” end of the equation, I pretentiously read The New Yorker (again, when I could afford it), and made sure I picked up the latest issue whenever I found myself flying home for a holiday. This act was only partly a sham, because I really did enjoy many of the articles, and actually appreciated their length. I read prose by Ursula Le Guin, Pauline Kael, and others whose work I admired, while seatmates on the plane read Time (whose articles were already getting shorter and shorter). If I wanted a news magazine, I chose U.S. News & World Report, because it seemed more heady, but also because the articles were more thorough than those of its competitors.

Although my motives may not have been entirely pure, I did learn a great deal, and was pointed toward interesting writers, topics, and points of view. By shamming intellectualism, I actually became an intellectual. I continued to pursue my part-time Ivy League degree, and have enjoyed an intellectually challenging and rewarding life ever since.

And so it was with some amusement that I added The New Yorker to my Newsstand subscriptions on my iPad a couple of weeks ago. My one regret, however, is that I’ll probably never have the time to read everything I’d like to, now that my interests are so much broader and deeper than they were during my callow years. I’ll probably have to cancel it when I finally retire, because it’s awfully pricey (although its digital features are quite wonderful), but for now I’ll use part of my Social Security check to keep it going.

What brought all this to mind was an article by A. O. Scott in The New York Times Magazine (to which I also subscribe online), called “The Death of Adulthood in American Culture” (September 11, 2014). In it Scott bemoans the deaths of “the last of the patriarchs” (in television shows like The Sopranos, Breaking Bad, and Mad Men, none of which I have seen, although I kind of wish I had watched Mad Men, and may yet someday). He doesn’t miss the sexist aspect of patriarchal authority, but notes that in progressing beyond patriarchy “we have also, perhaps unwittingly, killed off all the grownups.” He goes on to note the popularity of “Young Adult” fiction among so-called grownups between the ages of 30 and 44. (Oddly enough, though, anything labeled “adult” fiction tends to be pornographic.)

Now, while I’ll admit to being geekily fond of comic book movies, I haven’t been able to bring myself to pick up copies of the Divergent or Hunger Games books. I never did get into Harry Potter, either, beyond the first chapter of the first book. The plots and characters of all of these franchises are so familiar, and so borrowed from older, wiser works that I read while I was earning the aforesaid degree, that I can only see reading them as an effort to get inside the heads of my “young adult” students. And to them I always recommend Ursula Le Guin’s books written for younger audiences, because she’s pretty egalitarian in her treatment of her readers.

It took me eight years to get my B.A., and during much of that time I was also working as an admin around scholars at Penn, many of whom are now dead, but from whom I learned how to become (eventually) an adult. So I find myself trying somewhat desperately (and perhaps pathetically) to find ways to lead my own students toward at least a few more worthy literary endeavors. They will probably never read War and Peace. But how about a bit of Dickens?

The Beloved Spouse and I watched David Suchet’s tribute to Agatha Christie the other night on PBS and wondered at Christie’s ability to write

lucid, careful prose as a very young child.

It shouldn’t be all that surprising, once one is familiar with what children

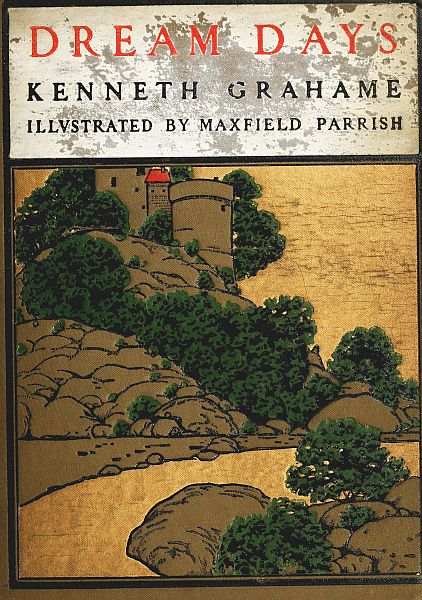

read in those days: Lewis Carroll’s Alice stories, J. M. Barrie’s Peter and Wendy, Kenneth Grahame’s Dream Days. The prose in these

“children’s” books is so rich, complex, and erudite that they’re well worth

reading by adults today—rather like some of the cartoons we watched as kids

(Rocky and Bullwinkle, anyone?) that amused both children and parents.

It seems a bit ironic to me that some of the animated films out today offer more intellectual stimulation than do novels aimed at teenagers. The Boxtrolls, which is amazingly animated and richly written, is far less juvenile than what I’ve seen of the Harry Potter movies. I’m not talking here about the Disney princess movies (however engaging they might be) that make me happy I don’t have a small daughter today and don’t have to deal with the princess phase. Rather, it’s Lemony Snicket and Despicable Me that seem to harken back to Kenneth Grahame’s hilarious, luminous story, “Its Walls Were as of Jasper.” I tried reading that story to my Art History students when I was teaching about manuscript illumination. But they didn’t get it: too many unfamiliar words.

Scott reminds us in his essay that Huckleberry Finn is commonly relegated to the children’s section of libraries, and that’s about as close as most of today’s students get to literary depth. Numerous attempts to ban it suggest its potential power to do just what Socrates died for: corrupt the minds of the young by teaching them how to think. I’m pretty sure they don’t read Moby-Dick anymore, and if they get any Shakespeare at all, it’s Romeo and Juliet.

Another irony exists in all this, and it has to do with J. M. Barrie. Peter Pan didn’t want to grow up. The Disneyfied Peter Pan never did, and he lives on in popular culture’s reluctance to offer many, if any, grownup models. In the end, Scott’s essay has explained to me why I watch so few television series today, and perhaps why most of the contemporary literature I read is science fiction or works about science. I’ve long held that some of the most compelling prose comes wrapped in covers that depict galaxies far away. One of the first science fiction novels I ever read was Wilmar Shiras’s Children of the Atom, which sparked an interest in science and speculative fiction that hasn’t left me even in my dotage. That particular novel probably inspired the X-Men comics and characters, and although aimed at the young, treated its readers as if they were, at least potentially, adults.

Image credit: The cover of Kenneth Grahame's Dream Days, illustrated by Maxfield Parrish, via Wikimedia Commons.